From data to information

Despite the rapid pace of digitization, reading books is still part of their lives for many people. Especially after a stressful day or on vacation you can relax by reading. You have a book in your hand, read a piece again, digress with your thoughts or are very concentrated. No matter which access one has to reading books – it is in any case about data processing. Once you can read it is very simple and takes the mental space of relaxation to extreme focus. The brain has to provide unimaginable computing power, but you can not feel it.

When we think about data, we usually come up with statistics on social developments, stock developments, health developments, etc. Mostly one has there very strictly structured data in the head which are then developed in the further processing to statistics. So we want to know very reliably how the stocks in our portfolio are developing, what health risks we are entering through smoking or where the most beautiful holiday destinations are.

Apropo Urlaub: If we are on a beach and hear the sound of the waves – could it be data then? In the old days, even before computer technology, the noise of the waves was called “noise”, which is exactly the opposite of data. But if one considers that the acoustics of rushing waves are also relaxing, just like reading books, then one would also have to call this experience data processing. The same thing happens when you look into a log fire, look at the irregular flames of the flames and thus come in a relaxed state. First of all, we have to go through a definition and description of what we call data. Data is generated from signals that originate from the respective environment. The best known are the electromagnetic spectrum with visible light, gravity, pressure and sound waves, radioactive radiation, and much more.

These natural signals all have a steady course, i. they have an infinitely small gradation. Such signals exist. If you have a sensor for this, data can be obtained from it. The biological person has eyes, ears, skin, etc. and can convert signals such as light, noise and pressure into data. At this point, it is very important to realize that this is just data and no information has been generated yet. So we are dealing with a three-stage processing process. This consists of the signal processing, the conversion of the data and the subsequent algorithmic processing for information.

So far, people have only been able to access the data for which they also have appropriate sensors – eyes, ears, nose, etc. We have no direct access to all other environmental signals. This requires transformation. A very striking example of this is the radioactivity. This we do not feel at the time of occurrence by human sensors. It was only the invention of the Geiger counter that gave us indirect access to these signals. For the time being, the signals that the universe otherwise makes available to us are beyond our knowledge, because we know nothing about them and can not build transformative sensors for them.

What drives man is his ambition to understand the world. The natural environment is messy and complicated. The human brain has learned to handle it. The computer world is a very neat, organized and simple facility. Simple does not mean easy at the same time. A special drive has been given to human curiosity in the Renaissance and empirical science. This way of exploring the world is data driven. The researchers make experiments and collect data which is subsequently evaluated, ie processed into information. We are looking for answers on how the world works. The more data we have, the more we realize that it contains errors. For very large amounts of data, this hardly plays a role. For smaller data sets, this can lead to completely wrong conclusions. In reality, nothing is right or wrong. Ultimately, it’s all about the assignment of meaning. Why are things the way they are?

Equipped with these findings, we would have two access possibilities to question nature:

- Problem driven: Searching for data to solve this problem.

- Data driven: what problems could be solved with this data?

A data-driven research project was the decoding of the human genome. The aim was to sequence and record the human gene in its entirety. After that, nowadays, you can look at this data and solve problems such as personal medication, genetic health risks, etc. A similarly data-driven phenomenon is evident in the telescope of the universe. Humanity has many questions that can not even be formulated. So here too the approach with Hubble telescopes or the like to categorize the universe. The problem-oriented approach disappears rapidly – but was in the pioneer days of inventors the ups and downs. (see Thomas Alva Edison)

The world itself is a really interesting place. I want to know what I can learn from it. For that I have to decide what I really want to know and ultimately it’s about meanings. Data in themselves are meaningless, but have different manifestations.

Basically, a distinction must be made between structured and unstructured data. The structured approach includes data that is usually arranged in rows and columns with corresponding column names and line numbers – each Excel sheet is structured this way. By contrast, unstructured data has no linear inner relationship. These include just the above-mentioned books, videos, music, etc.

To understand unstructured data, we need to know about their encoding, otherwise they will remain completely inaccessible to us. If we did not know what “A” means, we could not do anything with it. Coding is thus a way of assigning meaning. Musicians and composers have found a different form of coding – they use musical notes. A sheet of music is only understandable if one recognizes the meaning of the individual notes. In computer technology, the binary coding has prevailed. This method is so interesting because this code 0,1 is recognized both by the machine and by humans. If it is very difficult to read a native computer code in binary form – but it would not be excluded.

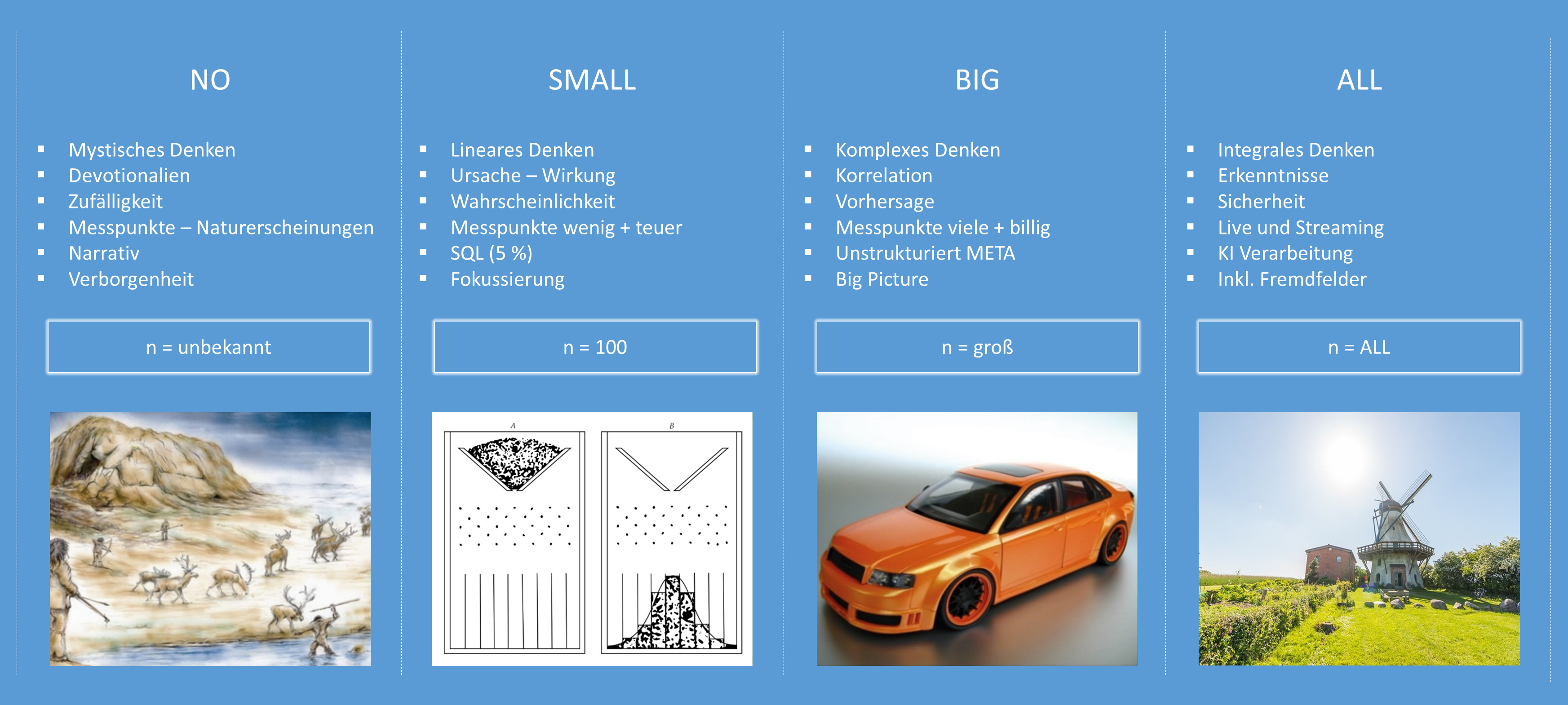

The concept of big data has emerged in recent years because it is possible with storage technology to store unimaginably large amounts of data. How big “big data” really is you can not say because it changes constantly. The lowest limit is likely to be in the range of one terabyte. Petabyte and Exabyte storage technologies are in production or already available. In addition to the Big Datas, there is also the world of No Data, Small Data and All Data. (see picture)

No Data: According to common sense, there is not really that. As already described above – if there is no sensor, then there is no data. The North American Natives were reliant on finding the caribou herds as reliably as possible in their living environment – the icy desert. Only then were they able to ensure their survival. However, they had no sensors for it. You could not see, smell or hear the animals over the huge land areas. Nevertheless, they had to decide in which direction they should go for a hunt. The decision could have been made by chance. They could have questioned one of their gods or could rely on narrative accounts. In fact, they have built a gauge for the directional decision. This is composed of a shoulder bone and a rib of a caribou. In combination it was something similar to a roulette – one turned the rib bone and where he stopped was the direction in which caribou should be. With this methodology, they have nevertheless created data and information from No Data. Probably the reliability was as great as the coincidence.

Small Data: In times of scientific pioneers and the subsequent industrialization, data was always in short supply. Mainly, data has been obtained by logging experiments. It was also the time of linear thinking with the economic model of cause-and-effect. You could do a market survey, based on a small sample, with very high probabilities. Statistics and probability calculations are the central elements of Small Data. The measuring points were little and very expensive.

Big Data: Developments in the IT sector led to the storage of unimaginably large amounts of data. In a first phase, these were created by login algorithms of operating systems. If a computer logs its own workload and the processor clocks in the gigahertz range, then one can roughly imagine what amounts of data this machine will produce. At the same time, the development of sensors has helped to provide environmental data in streaming form. Well known are weather stations. In the meantime, airplanes that need such equipment will be used. With these large amounts of data, it has been recognized that the earlier cause-and-effect relationship could suddenly have another meaning. The complex thinking was born with it. Meyer-Schönherr has described in his book “Big Data” the phenomenon of orange cars. According to this, owners of cars with this color must visit the workshop more often than anyone else. That’s a fact. However, no cause-effect relationship can be identified yet. The linear thinking gradually turns into the thinking of correlations. The processing of large amounts of data is only just beginning. The arrival of the IoT with planned 30 billion devices by 2020 will lead to exponential growth here. Above all, it will be the live data that these devices produce. If the networking and the underlying computers are powerful enough, we will come to completely new information and knowledge about the world. The change from the “Death Data to Life Data” is currently going on.

All Data: Like no “No Data”, there is no All Data. Signals from the environment have a steady course, i. one can always determine another measuring point between two measuring points. In turn, infinity is given. Natural values such as temperature, pressure and speed could be measured, at least theoretically, with infinite precision. But this infinity would also require infinitely large memory at the same time. If one wants to measure and store the entire universe, one would need exactly this universe as a storage medium and the same amount of energy to process this as well. Ray Kurzweil came to this conclusion in his reflections on memory and computer capacity.

As technology evolves along the lines of today’s pattern, we can see a move from Big Data to All Data, driven by IoT aspirations and capacity expansions of storage devices. In some areas a complete data collection is already developed. Especially when it comes to quantitative, so countable, sizes. Modern mill plants grinding the tons of grain into flour every day look at each grain in terms of its quality. The bad ones are shot out with a compressed air jet. The data of all cereal grains are recorded. In contrast, the data of 7 billion people are almost disappearing.

Deutsch

Deutsch English

English